Premium earbuds now ship with the obligatory “AI adaptive noise cancelling” feature, and marketing teams absolutely love throwing around terms like “intelligent sound processing” and “machine learning algorithms.”

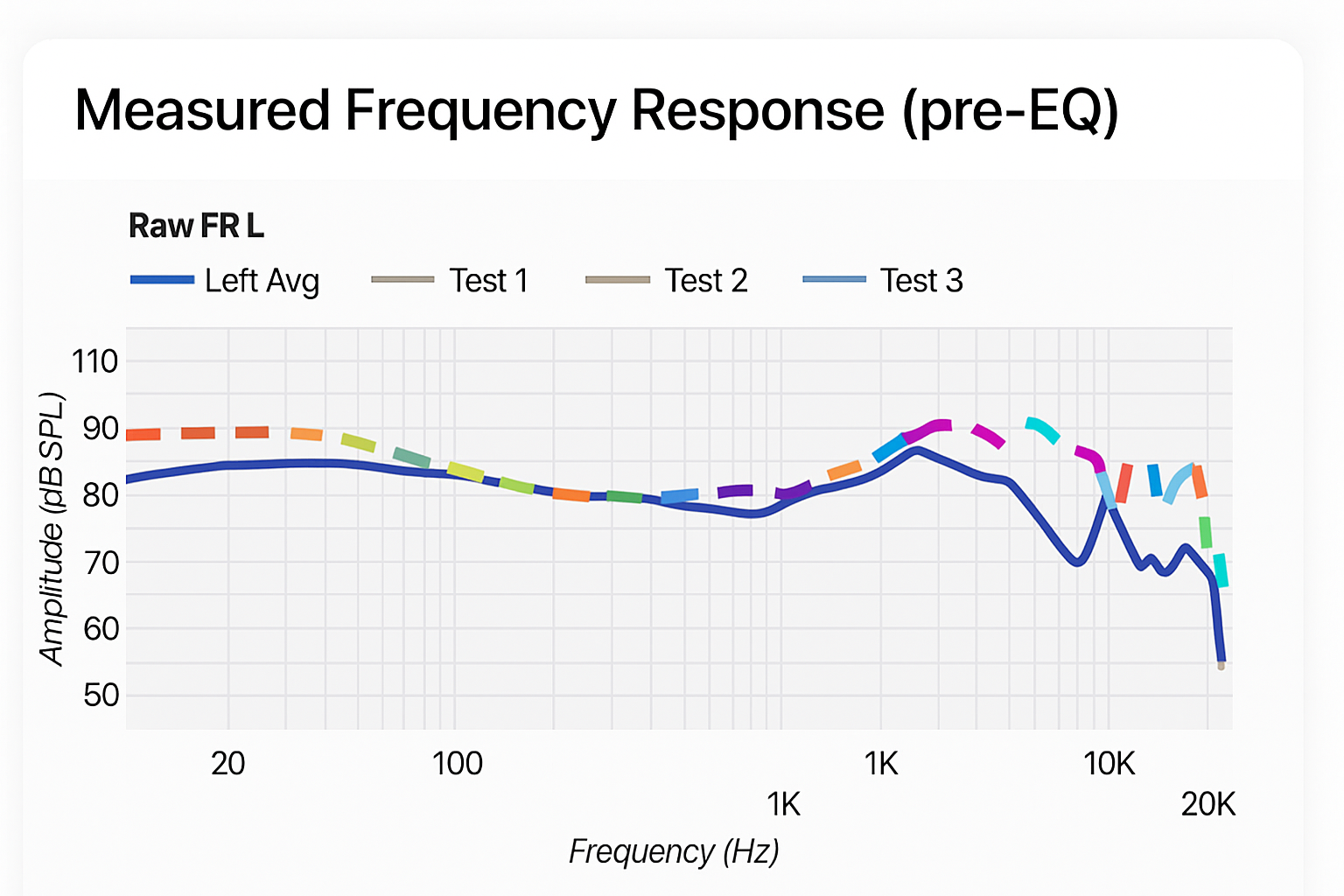

Thankfully, dedicated audiophile websites publish a slew of technical lab measurements that you can dig into, so you can tell which features are hype and which are real. Along the way, we also highlight each reviewer’s methodology wherever it is relevant.

Scrutinizing AI ANC via Different Lab Measurements

Adaptive noise cancelling should be capable of adjusting itself to something measurable. After all, the keyword is “adapt,” remember? As such, we expect these supposedly AI features to show up in frequency response charts, noise isolation graphs, and environmental testing data. For this article, we chose the following reviewers:

RTings – standardized tests in controlled environments. Very consistent in style, and it even lets you compare products directly, since each review is presented alongside other products.

Headphones.com – focuses on real-world measurement scenarios, showing how these features perform when you actually use them.

SoundGuys – attempts to quantify marketing claims with hard numbers. A lot closer to RTings, but with a deeper focus on just the main product itself.

Headphonecheck.com – dives deep into frequency response measurements that expose what algorithms are really doing.

Now, to address the elephant in the room: why only these four? Why exclude Crinacle, for example? The intention of this article is simply to show the basics of using lab measurements to evaluate overhyped features, specifically AI adaptive noise cancelling. Nowadays there’s a lot of AI-branded tech popping up left and right in the consumer segment, and some of it, if not all, needs a reality check on its claims.

As you will see, each reviewer represents a different aspect of lab measurement for quantifying AI ANC performance.

Four Earbuds, Four Different Approaches

The key is understanding what each reviewer prioritizes. Blatantly obvious, yes. But some focus on consistency and others on real-world performance, and you need both to see the bigger picture when evaluating a product:

RTings’ Review of the Sony WF-1000XM4

RTings’ standardized methodology produced noise isolation data showing a consistent 12-15dB of attenuation across the 20Hz-1kHz range, with the earbuds adjusting in real time as the environment changed. The completeness of the charts and explanations makes a strong case that the V1 chip works, even if it is a simple Bluetooth chip and not the dedicated AI processor the hype suggests.

This means that Sony’s implementation actually analyzes incoming audio and adjusts processing accordingly. Credit goes to RTings’ careful isolation of variables.
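To make a figure like 12-15dB concrete, here is a minimal Python sketch of how an attenuation number can be read off two isolation curves, one with ANC off and one with ANC on. The function name `band_attenuation_db` and every data point are made up for illustration. They are not RTings’ measurements or tooling.

```python
def band_attenuation_db(freqs_hz, anc_off_db, anc_on_db, lo_hz=20.0, hi_hz=1000.0):
    """Mean extra attenuation (dB) that ANC adds inside [lo_hz, hi_hz].

    Both curves are levels at the ear relative to the outside noise,
    so a more negative value means more isolation.
    """
    gains = [
        off - on
        for f, off, on in zip(freqs_hz, anc_off_db, anc_on_db)
        if lo_hz <= f <= hi_hz
    ]
    if not gains:
        raise ValueError("no measurement points inside the band")
    return sum(gains) / len(gains)


# Hypothetical measurement points, not taken from any review.
freqs = [20, 100, 500, 1000, 4000]
anc_off = [-2.0, -3.0, -5.0, -8.0, -25.0]
anc_on = [-14.0, -17.0, -19.0, -21.0, -26.0]

# The 4000 Hz point falls outside the band and is ignored.
print(f"{band_attenuation_db(freqs, anc_off, anc_on):.2f} dB")  # 13.25 dB
```

A single averaged number hides the shape of the curve, which is why the full charts matter more than a headline figure.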

Headphones.com’s Review of the Apple AirPods Pro 2

The real-world testing approach used by Headphones.com, under varying (practical) conditions, demonstrated that the frequency response changes based on fit depth and listening conditions. Their IEC-711 coupler measurements show the Adaptive EQ adjusting low and mid frequencies in real time. And no, this isn’t just volume compensation. The system monitors what you’re actually hearing via inward-facing microphones and adjusts the frequency curve accordingly.

The H2 also powers Adaptive Transparency, which processes environmental sounds 48,000 times per second. This is measurable: loud sounds above 85dB are turned down while important audio cues are preserved.
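For a feel of those two numbers, the Python sketch below works out how much time one processing pass gets at 48,000 passes per second, and shows a generic threshold limiter that turns down anything above 85dB. The limiter, its 4:1 ratio, and the function names are assumptions for illustration only. Apple does not publish its algorithm, and this is not it.

```python
RATE_HZ = 48_000       # "48,000 times per second" from the text
THRESHOLD_DB = 85.0    # level above which loud sounds are reduced
RATIO = 4.0            # assumed compression ratio, purely illustrative


def seconds_per_pass(rate_hz=RATE_HZ):
    """Time budget for one processing pass at the given rate."""
    return 1.0 / rate_hz


def limit_level_db(level_db, threshold_db=THRESHOLD_DB, ratio=RATIO):
    """Leave quiet sounds alone; shrink the overshoot of loud ones."""
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio


print(f"{seconds_per_pass() * 1e6:.1f} microseconds per pass")  # 20.8
for level in (70.0, 85.0, 100.0):
    print(level, "->", limit_level_db(level))  # 70.0, 85.0, 88.75
```

In a measurement, behavior like this would show up as output levels that track the input below the threshold and flatten out above it.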

SoundGuys’ Review of the Bose QuietComfort Ultra

SoundGuys measured 34dB of noise reduction below 400Hz once the algorithm had analyzed the wearer’s specific ear shape via its CustomTune buzzword tech. The system seems to calibrate itself during initial setup, using acoustic measurements to optimize ANC performance for your anatomy.

Indeed, it looks like Bose actually maps your ear canal acoustics and adjusts processing accordingly, as advertised. SoundGuys, using an approach similar to RTings’, showed that CustomTune lives up to its name. It apparently addresses the inconsistent ANC seen in other products by adapting based on active measurement.
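Decibel figures are logarithmic, so it helps to convert them into the fraction of noise that is left. The short Python sketch below does that using the standard dB definitions. The helper names are hypothetical, and the figures from different reviewers cover different frequency ranges and test setups, so treat the side-by-side output as a rough sense of scale, not a ranking.

```python
def db_to_pressure_ratio(reduction_db):
    """Fraction of sound pressure remaining after a reduction in dB."""
    return 10 ** (-reduction_db / 20)


def db_to_power_ratio(reduction_db):
    """Fraction of sound power remaining after a reduction in dB."""
    return 10 ** (-reduction_db / 10)


# 12 and 15 come from the RTings section, 34 from the SoundGuys section.
for db in (12, 15, 34):
    pressure = db_to_pressure_ratio(db)
    power = db_to_power_ratio(db)
    print(f"{db} dB -> {pressure:.1%} of the pressure, {power:.3%} of the power")
```

A 34dB reduction leaves roughly 2% of the original sound pressure, which is why a double-digit dB figure in the low frequencies is a big deal.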

Headphone Check’s Review of the Sennheiser MOMENTUM True Wireless 4

Sennheiser never claimed AI processing. The product has always been marketed as classic “adaptive noise cancellation,” albeit with algorithmic adjustments. But shoppers might assume all “adaptive” features are AI-powered. Headphonecheck.com’s data confirms that the adaptation here is conventional, not AI.

The frequency response in the review shows traditional algorithmic adaptation: the system detects noise levels and adjusts ANC strength accordingly. The Sound Check feature can then personalize EQ through app testing, creating measurable differences in output.

As you may know, this kind of adaptive ANC works well in steady environments, so the earbud would likely struggle with rapid changes. That puts the product in stark contrast with the dynamic processing in Sony’s V1 or Apple’s H2 systems, and makes it a perfect foil for our findings and methodology comparisons.

A Lesson of Results

These four measurement approaches expose the difference between genuine AI implementation and traditional adaptation. RTings’ standardized testing reveals which systems work consistently across controlled conditions. Headphones.com’s real-world focus catches dynamic behaviors that lab environments miss. SoundGuys’ scientific doggedness quantifies claimed improvements with hard data. Headphone Check’s technical analysis shows that ANC behavior that looks AI-driven is not always AI.

Again, it sounds very obvious, but no single reviewer tells the complete story. Sony’s V1 processor excels in RTings’ controlled tests but might behave differently in chaotic real-world environments. Apple’s H2 chip impresses in dynamic testing but may still have unintended effects in more specific scenarios. And Sennheiser’s traditional ANC is a “gotcha” for reviewers, who should state up front that it is not AI-related.

If a reviewer can’t demonstrate adaptive behavior with actual data, you’re probably looking at rebranded static processing. The best implementations, like Sony’s V1 processor and Apple’s H2 chip, produce measurable differences that multiple reviewers can verify.
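As a rough illustration of what “demonstrating adaptive behavior with actual data” can mean, the Python sketch below compares two response curves measured under different conditions and flags the system as adaptive only if they differ by more than ordinary measurement scatter. The 1.5dB repeatability tolerance, the function names, and all curve values are assumptions, not figures from any of the four reviewers.

```python
def max_curve_shift_db(curve_a_db, curve_b_db):
    """Largest per-frequency difference (dB) between two curves."""
    if len(curve_a_db) != len(curve_b_db):
        raise ValueError("curves must share the same frequency points")
    return max(abs(a - b) for a, b in zip(curve_a_db, curve_b_db))


def looks_adaptive(curve_a_db, curve_b_db, repeatability_db=1.5):
    """True if the curves differ by more than the assumed test scatter."""
    return max_curve_shift_db(curve_a_db, curve_b_db) > repeatability_db


# Hypothetical isolation curves at the same four frequency points.
quiet_room = [-12.0, -14.0, -13.0, -9.0]
noisy_train = [-18.0, -21.0, -15.0, -9.5]
repeat_run = [-12.4, -13.7, -13.2, -9.1]

print(looks_adaptive(quiet_room, noisy_train))  # True: up to 7 dB of change
print(looks_adaptive(quiet_room, repeat_run))   # False: within scatter
```

A difference between two runs can also come from a changed fit, so a check like this only means something when the reviewer controls the seal and repeats the measurement.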